Treating AI Like a New Hire: What I Learned

Good context doesn't just inform - it shapes behavior. It's the difference between an AI that needs handholding and one that works autonomously.

I recently wrote about developing 'LLM BS tolerance': we need to accept that AI will hallucinate, miss context, and need correction. That's still true. But a lot of the BS comes from asking AI to work blind when we already have the tools and context it needs.

I was treating AI like it should magically know everything, and getting frustrated when it didn't. Why not treat AI like a new joiner instead: collect the relevant docs and information, onboard it, and give it access to tools?

Through experimentation, I've found context engineering evolves through four phases: dump everything, curate context, structure information, automate access. Here's how I discovered each one:

Phase 1: Dump everything

I started with Cursor on our dbt project. My first instinct? Give it all the context and let it figure it out.

This quickly hit context limits and produced slow, unfocused responses. The AI was drowning in information.

Phase 2: Curate context

Then I discovered the power of precision. Instead of adding a folder with hundreds of SQL files to the context, I just pointed the AI to the 2-3 relevant models and said, "Look for models upstream if needed." The AI could navigate from there using its tools. Less context, better results.
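To make "navigate upstream" concrete, here is a minimal sketch of the idea, not a description of how Cursor does it internally. It assumes models reference their parents with the single-argument `{{ ref('model_name') }}` form, and the model names and SQL in the example are hypothetical.

```python
import re

# Matches the single-argument form {{ ref('model_name') }}.
# Two-argument refs (package, model) are deliberately ignored in this sketch.
REF_CALL = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")


def direct_upstream(sql: str) -> list[str]:
    """Return model names referenced via ref() in one model, without duplicates."""
    parents: list[str] = []
    for name in REF_CALL.findall(sql):
        if name not in parents:
            parents.append(name)
    return parents


def all_upstream(model: str, sql_by_model: dict[str, str]) -> list[str]:
    """Follow ref() edges from `model` and collect every upstream model once."""
    found: list[str] = []
    to_visit = [model]
    while to_visit:
        current = to_visit.pop()
        # Models missing from the mapping are treated as having no parents.
        for parent in direct_upstream(sql_by_model.get(current, "")):
            if parent not in found:
                found.append(parent)
                to_visit.append(parent)
    return found


if __name__ == "__main__":
    # Hypothetical models, keyed by name, with their SQL as the value.
    models = {
        "orders_daily": "select * from {{ ref('stg_orders') }} "
                        "join {{ ref('stg_customers') }} using (customer_id)",
        "stg_orders": "select * from {{ ref('raw_orders') }}",
        "stg_customers": "select 1 as customer_id",
        "raw_orders": "select 1 as customer_id",
    }
    print(sorted(all_upstream("orders_daily", models)))
```

The point is that the starting model is enough: everything else can be discovered by following references, which is why handing over 2-3 files worked better than handing over the whole folder.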

Phase 3: Structure information

Curating context is a manual exercise: each time, I have to choose which information is relevant for the AI. To let the AI work more autonomously, I focused on creating structured information that it can reuse every time. This led me to create an AGENTS.md file with relevant information about the project and our team. Here are some of the headings:

# Team Overview (Teams and responsibilities)

# Project Overview (Stack, domain, primary databases)

# Critical information (Core source tables, seed files, DBT standards)

# Project structure

# Essential commands

# Common patterns

# Environment quick fixes

At the same time, I started running Claude Code inside my notes folder, where my project docs, notes and daily logs already lived. I also gave Claude Code a CLAUDE.md doc that provides helpful information about me, my work and the team, and directs the AI to act as my chief of staff. Here are some of the headings:

# My context (Who am I, constraints)

# Repository Overview

# Claude's Role: My chief of staff

# Your workspace (Give full control over the `/chief_of_staff/` folder)

# Core responsibilities

# Operating Principles

# When starting work

# Information flow

# Directory Structure

# Obsidian Patterns (Project links, Frontmatter)

# CLI tools

To understand what makes context effective, I asked AI which parts of my CLAUDE.md were most valuable. Its answers revealed what context engineering really does:

- "Energy is my primary constraint (father of 4yo)" - AI noted this helps it prioritize and protect my time, not just execute tasks blindly.

- "You have agency: Make reasonable decisions without asking. I'll correct if needed" - This shifts AI from waiting for permission to taking initiative within boundaries.

The pattern? Good context doesn't just inform - it shapes behavior. It's the difference between an AI that needs handholding and one that works autonomously.

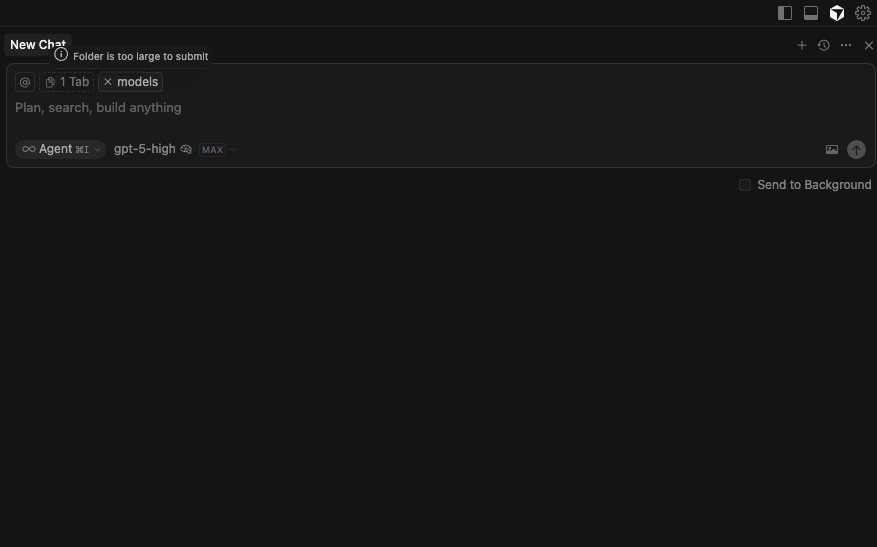

Phase 4: Automate access

The last breakthrough was CLI access. Instead of me pulling Jira tickets to paste, I gave the AI acli access. Instead of me explaining GitHub PRs, it could run gh commands itself. The AI becomes more self-sufficient in gathering context.

Here is the example from the CLAUDE.md file on how to use the Atlassian CLI tool:

If I provide you with a Jira URL, you can extract the ticket number and use acli.

For example for url = https://company.atlassian.net/browse/ABC-1234, you can run the following command: `acli jira workitem search --jql "key = ABC-1234" --fields "key,summary,assignee,reporter"`

You can also add comments when relevant with `acli jira workitem comment --jql ...`
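The "extract the ticket number and use acli" step can be sketched in a few lines of Python. This is only an illustration of what the instruction asks the agent to do: the function names are hypothetical, the regex assumes keys shaped like `ABC-1234` after `/browse/`, and the command simply mirrors the one quoted above, so check the flags against `acli --help` for your version.

```python
import re
import shlex

# Assumes ticket keys look like ABC-1234 and follow /browse/ in the URL.
TICKET_KEY = re.compile(r"/browse/([A-Z][A-Z0-9]*-\d+)")


def ticket_key_from_url(url: str) -> str:
    """Pull the Jira ticket key out of a browse URL."""
    match = TICKET_KEY.search(url)
    if match is None:
        raise ValueError(f"No Jira ticket key found in {url!r}")
    return match.group(1)


def acli_search_command(url: str) -> list[str]:
    """Build the argument list for the search command quoted in CLAUDE.md."""
    key = ticket_key_from_url(url)
    return [
        "acli", "jira", "workitem", "search",
        "--jql", f"key = {key}",
        "--fields", "key,summary,assignee,reporter",
    ]


if __name__ == "__main__":
    command = acli_search_command("https://company.atlassian.net/browse/ABC-1234")
    # Print a shell-safe version instead of running it, since acli needs auth.
    print(shlex.join(command))
```

Building the command as a list rather than a string keeps the JQL expression intact as one argument, which avoids quoting mistakes if the list is later passed to `subprocess.run`.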

How I work today

Here is what this looks like in practice. Last week I needed to complete a Jira ticket.

My initial prompt:

You have the following ticket to complete: https://company.atlassian.net/browse/ABC-1234

Please create a new branch called: `feature/ABC-1234` and attempt to create a PR, make sure the new dbt model works, apply sqlfluff and do some testing with snow CLI commands to make sure it works as expected.

Before creating any code tell me about the plan and I will give you the green light to proceed with the coding once we align on the approach :)

AI's response

Instead of me copy-pasting the Jira ticket, Cursor used acli to pull the full context:

I'll fetch the Jira ticket details via CLI to tailor the plan precisely to ABC-1234 and I’m setting up a short todo list to track this work.

I’m going to pull the Jira ticket details (summary, status, description, labels, components, and comments) using the CLI so I can tailor the plan precisely to ABC-1234.

I'll check acli's Jira commands and authentication status to retrieve the ABC-1234 details correctly.

I'll scan the repo for the existing supervisory org arrays model and any current hierarchy logic to align naming and approach before proposing the detailed plan.

I pulled the Jira details and scoped the work to exactly what ABC-1234 asks. Here’s a tight plan tailored to it.

The acli command initially failed, but because the agent had access to the CLI output, the feedback loop let it resolve the issue itself by using --help flags. It failed initially because I had provided a bad example in the AGENTS.md file 😅

The feedback loop is one of the superpowers of AI agents. They can iterate, try again if something fails, plan and execute autonomously.

It is impressive that the agent picked up this line from the Jira ticket:

This table would provide the current hierarchy, similar to the unified_supervisory_organization_arrays

It then scanned the repo to find that model, understand how it's built, and prepare a plan based on that.

Then I gave the green light to implement the plan and it just started working on it.

Cursor followed the AGENTS.md file, so it knew the dbt folder structure, naming conventions, CLI tools etc. No extra context was needed beyond a link to a ticket and high-level instructions.

Interacting with AI

Notice what changed: I'm not transcribing information or explaining our codebase. I'm giving strategic direction while Cursor handles the execution using the same tools I have access to: acli (Atlassian CLI), dbt, snow (Snowflake CLI), gh (GitHub CLI).

My energy goes to decisions and direction, not explaining context repeatedly. This is the power of context-engineered AI: like a well-onboarded team member, it's autonomous rather than dependent, letting you focus on strategic rather than tactical work.

Conclusion

Context engineering doesn't eliminate AI hallucinations - it just makes them less frequent and easier to catch. You'll still need that BS tolerance I wrote about earlier. The difference is that now the BS comes from edge cases, and you can adjust the context to avoid them in the future.

Context engineering is like training a new team member: lots of upfront investment in documentation and process, but then they become fairly autonomous and scale well. So much so that the constraint moves from the number of employees who can code to the capacity and time of AI managers.

I'm not a people manager, but I am still able to manage intelligence. It is nothing short of incredible that technology has led us to this. Many of the same managerial skills apply: clear communication, quality review, and strategic direction. Many senior ICs already have a good base to be AI managers.

However, one of the hardest parts is not the tech setup. It's that effective context is deeply personal. How do you standardize context engineering across a team without losing the personalization that makes it work? This is the next frontier I want to explore.

This isn't a productivity framework or career advice. Just what I've learned from treating AI like a teammate instead of a tool. Your experiments will look different, but they're worth doing.